One of the more common claims in the 8-bit vs. 10-bit argument is this: with 8-bit you get 16.7 million colours, and with 10-bit you get roughly 1.07 billion colours.

Below is a brief explanation of where those numbers come from, in as simple a way as possible.

8-bit colour: each pixel stores 8 bits for each primary colour (R, G, B). An 8-bit binary number can take 256 different values (2^8; 'bit' is literally short for binary digit), so there are 256 shades of each primary colour. Multiply the three channels together and you get 16,777,216 colours (256^3).

10-bit colour: each channel (R, G, B) can hold 1,024 (2^10) shades, which when combined gives approximately 1.07 billion colours (1024^3 = 1,073,741,824). Imagine a super smooth colour gradient compared with the more noticeable steps between shades in 8-bit colour.
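The arithmetic above can be checked with a few lines of Python. This is only a minimal sketch: the function names `levels_per_channel` and `total_colours` are made up for illustration, and it assumes three channels (R, G, B) with the same bit depth on each.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct shades one channel can hold at this bit depth."""
    return 2 ** bits


def total_colours(bits: int, channels: int = 3) -> int:
    """Number of distinct colours when every channel has the same bit depth."""
    return levels_per_channel(bits) ** channels


for bits in (8, 10):
    shades = levels_per_channel(bits)
    colours = total_colours(bits)
    print(f"{bits}-bit: {shades:,} shades per channel, {colours:,} colours")
```

Each extra bit doubles the number of shades per channel, so going from 8 to 10 bits gives four times as many shades per channel and 64 times as many colours overall.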

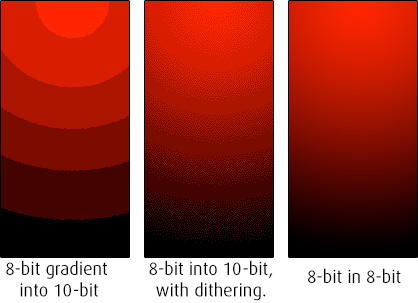

Image by Blackmagic Design. Be mindful of graphics such as these because they can be misleading and oversimplified. The representation above is an exaggeration of uncompressed 8-bit vs 10-bit in a 10-bit system. The graphic also subtly implies that the change could be horizontal only, which is not the case.

While not often visible to the naked eye, the difference can become substantial once the footage goes through post-production and is prepared for future deliverables. There are also particular situations where 8-bit colour can create issues such as banding, which is most noticeable in a gradient. For example, you might have a bright light source shining against a wall with a flat colour; as the light falls off you get an obvious gradient. 8-bit can struggle to represent this accurately on a 10-bit display because of the limited number of steps it has between colour shades, whereas 10-bit has four times as many steps per channel to work with. Other factors such as exposure and light intensity also affect the outcome, so we're not saying this will happen all the time.
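To get a feel for why a subtle gradient is where banding shows up, the sketch below counts how many distinct code values are available across a gentle brightness ramp. Everything in it is an assumption chosen for illustration: the ramp covers 40% to 50% of full brightness across 1,920 pixels, values are quantised by simple rounding, and the names `quantise` and `distinct_steps` are hypothetical. Real cameras and displays also apply gamma or log curves, which this ignores.

```python
def quantise(value: float, bits: int) -> int:
    """Map a brightness in the range 0.0-1.0 to the nearest integer code value."""
    max_code = 2 ** bits - 1
    return round(value * max_code)


def distinct_steps(start: float, end: float, width: int, bits: int) -> int:
    """Count the different code values used by a linear ramp across `width` pixels."""
    codes = set()
    for x in range(width):
        brightness = start + (end - start) * x / (width - 1)
        codes.add(quantise(brightness, bits))
    return len(codes)


steps_8 = distinct_steps(0.40, 0.50, 1920, bits=8)
steps_10 = distinct_steps(0.40, 0.50, 1920, bits=10)
print(f"8-bit:  {steps_8} shades, each band about {1920 / steps_8:.0f} pixels wide")
print(f"10-bit: {steps_10} shades, each band about {1920 / steps_10:.0f} pixels wide")
```

Under these assumptions the 8-bit ramp has only a few dozen shades to spread across the full width, so each band is wide enough to be seen as a stripe, while the 10-bit ramp has roughly four times as many and the bands are correspondingly narrower.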

Image: Wikipedia

8-bit into 10-bit occurs when 8-bit video is recorded on a 10-bit recorder, or when 8-bit is sent down HD-SDI, which is a 10-bit format. When 8-bit is fed into a 10-bit system, the 8 bits occupy the top of each 10-bit value and the two lowest (least significant) bits are both zero. With dithering added to the 8 bits, these two lower bits are filled with random numbers, which appears as noise but helps to hide the banding.
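As a rough illustration of the paragraph above, the sketch below places an 8-bit code value into a 10-bit word, first with the two lowest bits left at zero and then with those bits filled randomly. The function names are hypothetical, and the random fill is a deliberately simplified stand-in for dithering; real recorders and converters may use more sophisticated methods.

```python
import random


def pad_8_to_10(code8: int) -> int:
    """Shift an 8-bit value up by two bits; the two lowest bits stay at zero."""
    return code8 << 2


def pad_with_dither(code8: int, rng: random.Random) -> int:
    """Shift an 8-bit value up by two bits and fill the two lowest bits randomly."""
    return (code8 << 2) | rng.randrange(4)


rng = random.Random(0)  # fixed seed so repeated runs produce the same noise
for code8 in (0, 1, 128, 255):
    plain = pad_8_to_10(code8)
    dithered = pad_with_dither(code8, rng)
    print(f"{code8:3d} -> {plain:4d} ({plain:010b})  dithered: {dithered:4d} ({dithered:010b})")
```

Without the random fill, only every fourth 10-bit code is ever used, so the 256 original steps remain exactly as visible as before. The random low bits add no real picture information; they only break up the hard edges between bands at the cost of a small amount of noise.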